Artificial Neurons Mimic Complex Brain Abilities for Next-Generation AI Computing

For decades, scientists have been investigating how to recreate the versatile computational capabilities of biological neurons to develop faster and more energy-efficient machine learning systems. One promising approach involves the use of memristors: electronic components capable of storing a value by modifying their conductance and then utilizing that value for in-memory processing.
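The study itself does not spell out a circuit, but the general idea of "storing a value in conductance and computing in memory" is often illustrated with a memristor crossbar. The sketch below is a generic, idealized illustration, not the team's device. It assumes perfectly linear devices, ignores wire resistance and noise, and the conductance and voltage values are hypothetical. Each device's current follows Ohm's law, and the currents add up along each column (Kirchhoff's current law), so the array performs a matrix-vector product where the data is stored.

```python
import numpy as np

def crossbar_mvm(conductances: np.ndarray, voltages: np.ndarray) -> np.ndarray:
    """Column currents of an idealized memristor crossbar.

    conductances[i, j]: conductance (siemens) of the device joining row i to column j.
    voltages[i]: read voltage (volts) applied to row i.
    Ohm's law gives each device current G[i, j] * V[i]; Kirchhoff's current law
    sums those currents along each column, so the array computes G.T @ V in place.
    """
    return conductances.T @ voltages

# Hypothetical 3x2 array: the stored "weights" are the programmed conductances.
G = np.array([[1e-6, 2e-6],
              [3e-6, 0.0],
              [0.5e-6, 1e-6]])
V = np.array([0.2, 0.1, 0.4])
currents = crossbar_mvm(G, V)  # column currents in amperes
print(currents)
```

Because the multiply-accumulate happens where the weights are stored, no data has to be shuttled between separate memory and processor units, which is the source of the hoped-for speed and energy savings.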

However, a key challenge in replicating the complex processes of biological neurons and brains with memristors has been integrating both feedforward and feedback neuronal signals. These mechanisms underpin our cognitive ability to learn complex tasks from rewards and errors.

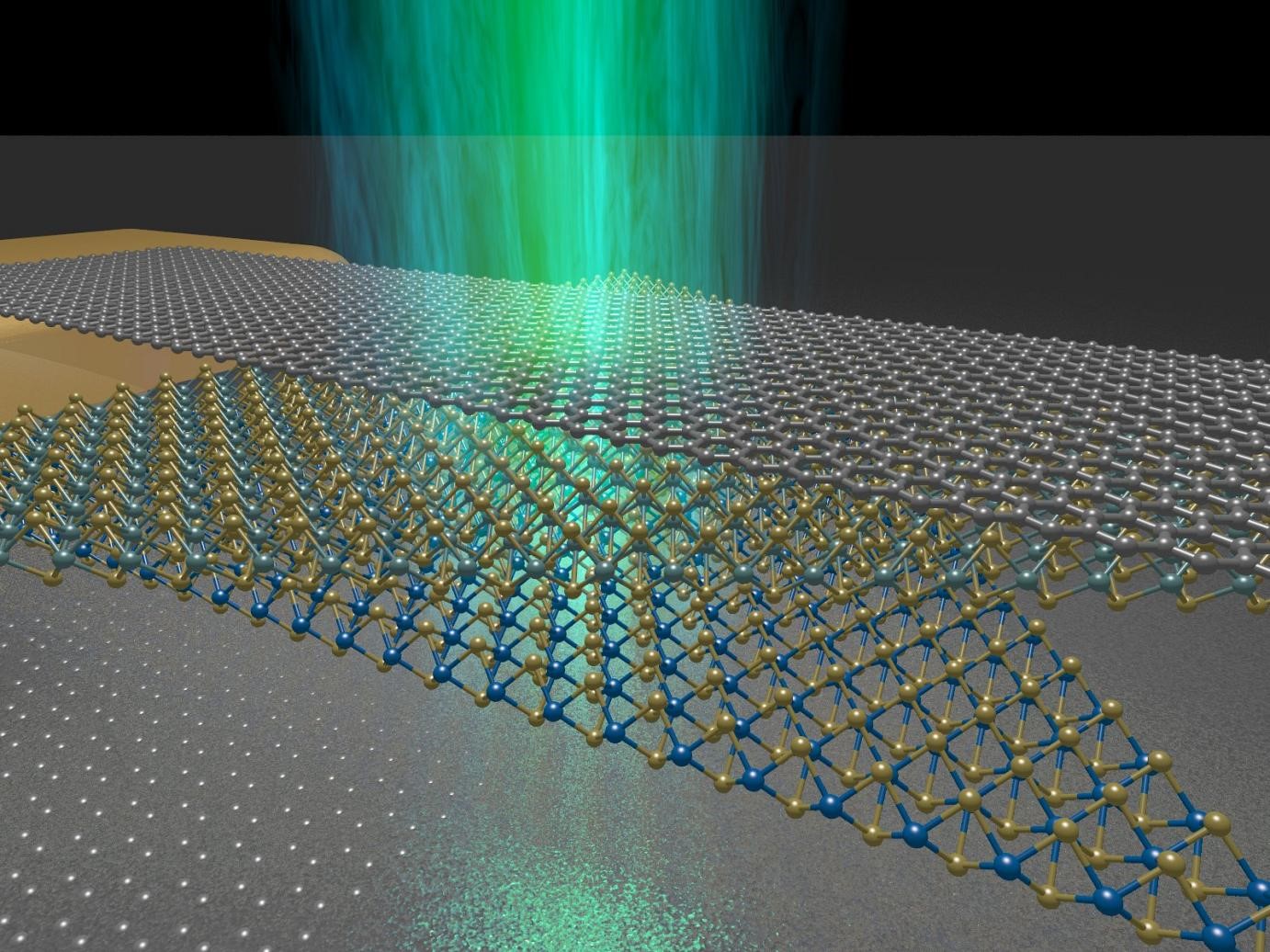

A team of researchers at The University of Texas at Austin, the University of Oxford and IBM Research Europe has reached an important milestone: the development of atomically thin artificial neurons created by stacking two-dimensional (2D) materials. The results have been published in Nature Nanotechnology.

“The use of such 2D structures in computing has been spoken about for years, but only now are we finally seeing the payoff after spending over seven years in development,” said Jamie Warner, a professor in the Cockrell School of Engineering’s Walker Department of Mechanical Engineering and director of the Texas Materials Institute. “By assembling wafer-scale 2D monolayers into complex ultrathin optoelectronic devices, this will enable the start of new information processing approaches using 2D materials based on industrially scalable fabrication methods.”

In the study, the researchers expanded the functionality of electronic memristors by making them responsive to optical as well as electrical signals. This allowed separate feedforward and feedback paths to coexist within the same network. With this advance, the team created winner-take-all neural networks: computational learning systems that could help solve complex machine learning problems, such as unsupervised clustering and combinatorial optimization.
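To make "winner-take-all" concrete, here is a minimal software sketch of the generic competitive-learning rule applied to unsupervised clustering. It is a textbook illustration, not the team's optoelectronic implementation. The function names, learning rate, number of epochs and initialization scheme are all hypothetical choices. For each input, only the unit whose weights are closest "wins" and moves toward that input, while every other unit is suppressed.

```python
import numpy as np

def winner_take_all_clustering(data, n_units, lr=0.1, epochs=20, seed=0):
    """Unsupervised clustering with a simple winner-take-all (competitive) layer.

    Each output unit holds a weight vector. For every input, only the unit whose
    weights are closest to the input "wins" and moves toward it; all other units
    are left unchanged (the hard inhibition of a winner-take-all network).
    """
    rng = np.random.default_rng(seed)
    # Initialize each unit's weights on a randomly chosen data point (a copy).
    weights = data[rng.choice(len(data), size=n_units, replace=False)].astype(float)
    for _ in range(epochs):
        for x in data[rng.permutation(len(data))]:
            winner = np.argmin(np.linalg.norm(weights - x, axis=1))
            weights[winner] += lr * (x - weights[winner])  # only the winner learns
    return weights

def assign(data, weights):
    """Label each point with the index of its winning (closest) unit."""
    dists = np.linalg.norm(data[:, None, :] - weights[None, :, :], axis=2)
    return np.argmin(dists, axis=1)

# Two hypothetical, well-separated blobs of 2D points.
rng = np.random.default_rng(1)
blob_a = rng.normal(0.0, 0.5, size=(20, 2))
blob_b = rng.normal(10.0, 0.5, size=(20, 2))
points = np.vstack([blob_a, blob_b])

w = winner_take_all_clustering(points, n_units=2)
labels = assign(points, w)
print(labels)
```

The "hard" competition, where only one unit updates per input, is what distinguishes this from ordinary gradient training, and it is this kind of mutual inhibition that the separate feedback path is meant to support in hardware.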

As artificial intelligence applications have grown exponentially, the computing power they require has outpaced the development of new hardware based on traditional processors, creating an urgent need to research new techniques like this one.

“This entire field is super-exciting, as materials innovations, device innovations, and novel insights into how they can be creatively applied all need to come together,” said Professor Harish Bhaskaran of the Advanced Nanoscale Engineering Laboratory at the University of Oxford and of the IBM Research Zurich laboratory, who led the project. “This work represents a new toolkit, exploring the power of 2D materials, not in transistors, but for novel computing paradigms.”

2D materials are made up of just a few layers of atoms, and this fine scale gives them various exotic properties, which can be fine-tuned depending on how the materials are layered. In this study, the researchers used a stack of three 2D materials (graphene, molybdenum disulfide and tungsten disulfide) to create a device whose conductance changes depending on the power and duration of the light or electrical signal applied to it.

Unlike digital storage devices, these devices are analog and operate similarly to the synapses and neurons in our biological brain. This analog behavior enables computation: a sequence of electrical or optical signals sent to the device produces gradual changes in the amount of stored electronic charge. This process forms the basis of threshold modes for neuronal computations, analogous to the way our brain processes a combination of excitatory and inhibitory signals.
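The threshold behavior described above can be illustrated with a generic leaky integrate-and-fire abstraction. This is a minimal sketch, assuming that each pulse simply adds to (excitatory) or subtracts from (inhibitory) a stored analog state. The class name, leak factor and threshold are hypothetical values, not device parameters reported in the study.

```python
from dataclasses import dataclass

@dataclass
class ThresholdNeuron:
    """Toy analog neuron: a stored state that changes gradually with each pulse.

    Excitatory pulses carry positive weights and inhibitory pulses negative ones.
    The state leaks a little between pulses, and the neuron "fires" (and resets)
    once the accumulated state reaches the threshold.
    """
    threshold: float = 1.0   # hypothetical firing threshold (arbitrary units)
    leak: float = 0.9        # hypothetical fraction of state retained per step
    state: float = 0.0

    def step(self, pulse: float) -> bool:
        # Decay the stored state slightly, then add the incoming pulse.
        self.state = self.state * self.leak + pulse
        if self.state >= self.threshold:
            self.state = 0.0  # reset after firing
            return True
        return False

neuron = ThresholdNeuron()
pulses = [0.3, 0.3, 0.3, -0.4, 0.3, 0.6, 0.5]  # positive: excitatory, negative: inhibitory
spikes = [neuron.step(p) for p in pulses]
print(spikes)  # → [False, False, False, False, False, True, False]
```

In this toy run, the inhibitory pulse at step four knocks the state back down, so the neuron only fires after two further excitatory pulses; without it, four equal excitatory pulses would already have crossed the threshold.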

The findings are exploratory in nature rather than system-level demonstrations, the researchers say, and this kind of computing will not appear in mobile phones within the next two years. However, findings like these are important for the further development of neuromorphic engineering, enabling scientists to better emulate and comprehend the brain.

“Besides the potential applications in AI hardware, these current proof-of-principle results demonstrate an important scientific advancement in the wider fields of neuromorphic engineering and algorithms, enabling us to better emulate and comprehend the brain,” said Ghazi Sarwat Syed, a research staff member at IBM Research Europe-Switzerland.

The work was funded through grants from the European Union’s Horizon 2020 Research and Innovation Programme, the Engineering and Physical Sciences Research Council and the European Research Council.